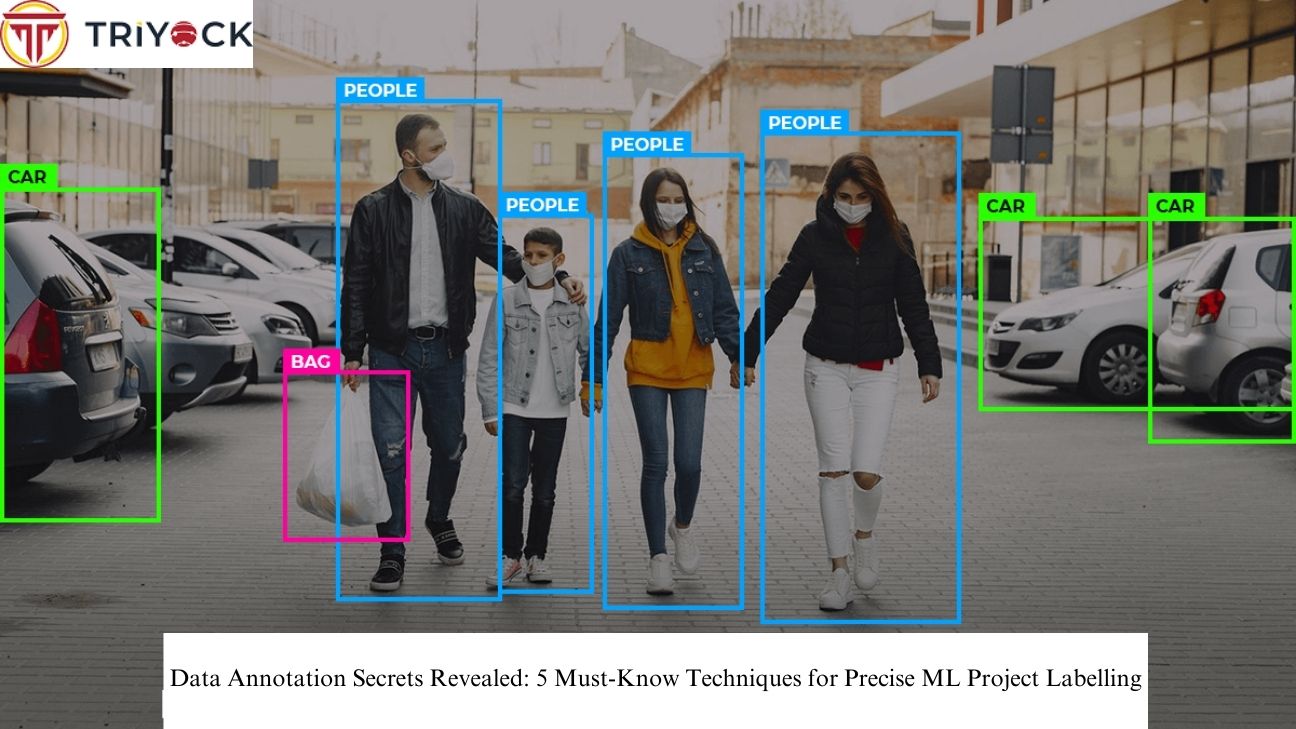

Data Annotation Secrets Revealed: 5 Must-Know Techniques for Precise ML Project Labelling

Aisha Aslam

Data annotation plays a crucial role in machine learning projects. It is the process of labelling or tagging raw data by adding metadata or annotations that make the data understandable to machine learning algorithms. These annotations provide the reference, or ground truth, from which models learn patterns and make accurate predictions.

The Role of Data Annotation in Training Machine Learning Models

Here are some key aspects of data annotation in machine learning:

Training data creation: Data annotation is essential for creating training datasets. For supervised learning, where the model learns from labelled examples, annotators add annotations to raw data, such as images, text, audio, or video, to indicate relevant features or classes.

Ground truth generation: Data annotation helps establish ground truth labels for training and evaluation. Ground truth refers to the correct or desired output for a given input data point. By annotating data with accurate labels, annotators create a benchmark for training models and assessing their performance.

Model evaluation: Annotated data is also crucial for evaluating the performance of machine learning models. By comparing the model's predictions with the ground truth annotations, metrics such as accuracy, precision, recall, or F1 score can be calculated.
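As a minimal illustration of comparing predictions with ground truth, the sketch below computes accuracy, precision, recall, and F1 for one class. The function names and the spam/ham labels are hypothetical; libraries such as scikit-learn provide equivalent, more complete metric functions.

```python
def accuracy(y_true, y_pred):
    """Fraction of items where the prediction matches the ground-truth label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two equal-length, non-empty label lists")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall and F1 for one class, treating `positive` as the target label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical ground-truth annotations and model predictions for six emails.
ground_truth = ["spam", "ham", "spam", "spam", "ham", "ham"]
predictions = ["spam", "spam", "spam", "ham", "ham", "ham"]

print(accuracy(ground_truth, predictions))
print(precision_recall_f1(ground_truth, predictions, positive="spam"))
```

Here the model catches two of the three spam emails and wrongly flags one ham email, so precision and recall for "spam" are both 2/3.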

Types of Data Annotation Techniques

1. Manual Annotation

Manual annotation is a data annotation technique in which human annotators label data according to predefined criteria or guidelines. It relies on human judgement and expertise to assign annotations accurately. Manual annotation is commonly used for tasks such as image and object recognition, sentiment analysis, and text classification.

The process of manual annotation typically involves the following steps:

- Annotation Guidelines: Defining clear annotation guidelines is essential to ensure consistency and accuracy. These guidelines specify the annotation task, label definitions, and any specific rules or instructions for annotators to follow.

- Annotation Tool: Annotators use annotation tools or software that provide an interface to view and label the data. These tools can vary based on the data type, such as image annotation tools, text annotation tools, or audio annotation tools.

- Annotation Process: Annotators review the data examples one by one and apply the appropriate annotations based on the guidelines. The annotations can include bounding boxes, segmentations, class labels, sentiment scores, or any other relevant annotations depending on the task.

- Quality Control: To ensure high-quality annotations, various quality control mechanisms are implemented. These can include regular training sessions for annotators, inter-annotator agreement checks to measure consistency among annotators, and periodic reviews of annotated samples to address any issues or discrepancies.
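Part of this quality control can be automated at the tool level. As a minimal sketch, assuming the guideline's label definitions are encoded as a set of allowed labels (`GUIDELINE_LABELS`, the `Annotation` record and `find_invalid` are hypothetical names), annotations using a label the guidelines do not define can be flagged before they enter the dataset:

```python
from dataclasses import dataclass

# Hypothetical guideline for a sentiment task: the only labels annotators may use.
GUIDELINE_LABELS = {"positive", "negative", "neutral"}


@dataclass
class Annotation:
    item_id: str
    annotator: str
    label: str


def find_invalid(annotations, allowed_labels):
    """Return the annotations whose label is not defined in the guidelines."""
    return [a for a in annotations if a.label not in allowed_labels]


batch = [
    Annotation("review-1", "ann-A", "positive"),
    Annotation("review-2", "ann-A", "mixed"),  # "mixed" is not a guideline label
    Annotation("review-3", "ann-B", "neutral"),
]

for bad in find_invalid(batch, GUIDELINE_LABELS):
    print(f"{bad.item_id}: label {bad.label!r} from {bad.annotator} is not in the guidelines")
```

Flagged items can then be returned to the annotator or raised in a guideline discussion, since a recurring invalid label may signal a gap in the guidelines themselves.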

Pros of Manual Annotation:

- Accuracy: Manual annotation allows for precise labelling of data by drawing on human judgement and expertise.

- Flexibility: Manual annotation can handle complex tasks or ambiguous cases that automated methods might struggle with.

- Adaptability: Manual annotation can adapt to evolving annotation requirements and easily incorporate feedback or updates.

Cons of Manual Annotation:

- Time and Cost: Manual annotation can be time-consuming and expensive, especially for large datasets or tasks that require extensive human effort.

- Subjectivity and Bias: Different annotators may have subjective interpretations or biases, which can introduce inconsistencies or inaccuracies in the annotations.

- Scalability: Scaling manual annotation efforts to handle large datasets can be challenging and may require managing a large team of annotators.

Best Practices for Manual Annotation:

- Provide clear and detailed annotation guidelines to ensure consistency among annotators.

- Conduct regular training sessions and feedback loops to improve annotators' understanding and performance.

- Implement quality control measures, such as inter-annotator agreement checks, to assess consistency and address any discrepancies.

- Maintain open communication channels with annotators to address questions, provide clarifications, and ensure a shared understanding of the annotation task.

2. Automatic Annotation

Automatic annotation, also known as automated or algorithmic annotation, is the process of generating annotations or labels for data using automated techniques, such as machine learning algorithms or pre-existing models. Rather than relying solely on human annotators, it aims to reduce the manual effort and time required for data labelling.

The process of automatic annotation typically involves the following steps:

- Training Data: Model-based automatic annotation typically starts from a training dataset that has been manually annotated by human experts. This dataset serves as the basis for training the automated annotation algorithm or model.

- Feature Extraction: Automated annotation algorithms often rely on extracting relevant features from the input data. These features could be image descriptors, text embeddings, audio spectrograms, or any other representation that captures the characteristics of the data.

- Model Training: Machine learning techniques, such as supervised learning or deep learning, are used to train a model based on the annotated training data. The model learns patterns and correlations between the input data and the corresponding annotations.

- Annotation Inference: Once the model is trained, it can be applied to new, unlabelled data to automatically generate annotations. The model predicts the labels or annotations based on the patterns it has learned during training.
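These steps can be illustrated end to end with a deliberately tiny model. The sketch below assumes a toy text task: the hypothetical `extract_features` counts a few cue words, training computes one centroid per class from the manually labelled seed set (a nearest-centroid classifier, chosen here only for brevity), and inference labels each new text with its closest centroid. A real project would use a far richer feature extractor and model.

```python
import math


def extract_features(text):
    """Hypothetical toy features: counts of positive and negative cue words."""
    words = text.lower().split()
    positive = sum(words.count(w) for w in ("great", "love"))
    negative = sum(words.count(w) for w in ("bad", "broken"))
    return [positive, negative]


def train_centroids(labelled):
    """Nearest-centroid 'training': average the feature vectors of each class."""
    sums, counts = {}, {}
    for text, label in labelled:
        feats = extract_features(text)
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, value in enumerate(feats):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def auto_annotate(centroids, texts):
    """Label each unlabelled text with the class whose centroid is closest."""
    results = []
    for text in texts:
        feats = extract_features(text)
        label = min(centroids, key=lambda c: math.dist(feats, centroids[c]))
        results.append((text, label))
    return results


# Manually annotated seed data, then model training.
seed = [
    ("great product love it", "positive"),
    ("love the colour", "positive"),
    ("bad fit and broken zip", "negative"),
    ("arrived broken", "negative"),
]
centroids = train_centroids(seed)

# Annotation inference on new, unlabelled data.
print(auto_annotate(centroids, ["really great", "screen was broken"]))
```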

Pros of Automatic Annotation:

- Efficiency: Automatic annotation significantly reduces the manual effort and time required for data labelling, which makes it well suited to large datasets or tasks with frequent updates.

- Consistency: Automated annotation algorithms apply the same decision rules to every item, avoiding the annotator-to-annotator inconsistencies that arise in human annotation. They can, however, still reproduce biases present in their training data.

- Scalability: Automatic annotation can easily scale to handle large volumes of data without the need to recruit and manage a large team of human annotators.

Cons of Automatic Annotation:

- Accuracy: The accuracy of automatic annotation depends on the quality and representativeness of the training data. It may struggle with complex or ambiguous cases that require human judgement.

- Domain-Specificity: Automatic annotation models trained on specific domains may not generalise well to new or different domains, requiring retraining or fine-tuning for optimal performance.

- Error Propagation: Inaccuracies or errors in the training data can be propagated by the automatic annotation model, leading to incorrect annotations.

Best Practices for Automatic Annotation:

- Ensure the training dataset is of high quality and representative of the target domain to improve the accuracy of the automatic annotation.

- Regularly evaluate and validate the performance of the automatic annotation model against manually annotated samples to measure its accuracy and identify areas for improvement.

- Incorporate feedback mechanisms to iteratively refine and update the automatic annotation model based on new labelled data or user feedback.

Key Techniques for Precise ML Project Labelling

1. Semantic Segmentation

Semantic segmentation is a computer vision technique that aims to assign a semantic label (such as object class or category) to each pixel in an image. It involves segmenting an image into different regions or objects based on their semantic meaning. Semantic segmentation is commonly used in tasks such as autonomous driving, image understanding, and medical image analysis.

Here's an overview of how semantic segmentation works and how it can be applied for precise labelling:

- Architecture: Semantic segmentation is often implemented using deep learning architectures, particularly convolutional neural networks (CNNs). CNNs are designed to extract hierarchical features from images, enabling them to capture contextual information and spatial relationships.

- Training: The training process involves providing a large dataset of labelled images, where each pixel in the image is labelled with its corresponding class or category. The CNN is trained to learn the mapping between the input image and its corresponding pixel-wise labels.

- Prediction: Once trained, the model can predict pixel-level labels for new, unseen images. It takes an image as input and produces a segmentation map in which each pixel is assigned a class label.
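Because a segmentation map is simply a grid of class IDs, the same representation serves for the annotated ground truth and for the model's prediction. The sketch below assumes hypothetical class IDs (0 = background, 1 = road, 2 = car) and small hand-written masks, and compares prediction with ground truth using pixel accuracy and per-class intersection over union (IoU), two commonly used segmentation metrics.

```python
def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted class equals the annotated class."""
    total = sum(len(row) for row in truth)
    correct = sum(p == t for prow, trow in zip(pred, truth) for p, t in zip(prow, trow))
    return correct / total


def class_iou(pred, truth, cls):
    """Intersection over union for one class; None if the class appears in neither map."""
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += int(p == cls and t == cls)
            union += int(p == cls or t == cls)
    return inter / union if union else None


# Hypothetical 3x3 annotated mask and model prediction (one car pixel mislabelled as road).
truth = [[1, 1, 0], [1, 2, 2], [0, 2, 2]]
pred = [[1, 1, 0], [1, 1, 2], [0, 2, 2]]

print(pixel_accuracy(pred, truth))
for cls in (0, 1, 2):
    print(cls, class_iou(pred, truth, cls))
```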

Case Studies:

- Autonomous Driving: Semantic segmentation plays a vital role in autonomous driving systems. By accurately segmenting different objects in the scene, such as roads, pedestrians, vehicles, and traffic signs, it enables self-driving vehicles to understand the environment and make informed decisions.

- Medical Image Analysis: In medical imaging, semantic segmentation is used for various applications, such as tumour segmentation, organ segmentation, and lesion detection. By precisely labelling different structures or abnormalities in medical images, it assists in diagnosis, treatment planning, and monitoring of diseases.

2. Polygon Annotation

Polygon annotation is a technique used for annotating objects or regions of interest in an image using polygonal shapes. It involves manually drawing and defining the boundaries of the object or region with a series of connected vertices, forming a closed polygon.

Applications of Polygon Annotation:

- Object Detection and Recognition: Polygon annotation is commonly used in object detection tasks, where the goal is to identify and localise objects in an image. Annotating object boundaries with polygons gives machine learning models the training data they need to detect and recognise these objects accurately.

- Instance Segmentation: Instance segmentation aims to segment and identify individual objects within an image. Polygon annotation provides precise labelling of each object instance, enabling the model to distinguish between multiple objects of the same class.

- Semantic Segmentation: Polygon annotation can be used for semantic segmentation tasks as well. Instead of annotating each pixel, annotators can outline the boundaries of objects using polygons, providing a more compact representation of the object labels.
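The compact polygon representation is usually converted to a pixel mask before a segmentation model is trained. A minimal sketch of that conversion, assuming polygons are stored as (x, y) vertex lists in pixel coordinates and that a pixel belongs to the object when its centre lies inside the polygon, tested with the even-odd ray-casting rule:

```python
def point_in_polygon(x, y, vertices):
    """Even-odd rule: count edge crossings of a horizontal ray cast to the right of (x, y)."""
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(vertices, width, height, class_id):
    """Give every pixel whose centre lies inside the polygon the value class_id (else 0)."""
    return [
        [class_id if point_in_polygon(c + 0.5, r + 0.5, vertices) else 0 for c in range(width)]
        for r in range(height)
    ]


# Hypothetical polygon annotation (a right triangle) for a class with id 1.
triangle = [(0, 0), (4, 0), (0, 4)]
for row in polygon_to_mask(triangle, 4, 4, class_id=1):
    print(row)
```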

Technique for Polygon Annotation:

- Annotation Tools: Specialised annotation tools or software, such as VGG Image Annotator (VIA), Labelbox, or RectLabel, provide features for drawing polygons and defining vertices interactively.

- Drawing and Vertex Placement: Annotators typically start by placing vertices at specific locations along the object boundary, creating a polygon by connecting these vertices. The annotators may refine the polygon by adjusting vertex positions to precisely align with the object's shape.

- Object Label Assignment: Once the polygon is created, annotators assign a class label to the annotated object. This label represents the semantic category or class to which the object belongs (e.g., car, person, tree).
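A finished polygon annotation typically boils down to a class label plus an ordered list of vertices. The record below is a hypothetical sketch of such a structure, not the export format of VIA, Labelbox, or RectLabel. It rejects polygons with fewer than three vertices and derives two quantities that help during review: the enclosed area (via the shoelace formula) and the axis-aligned bounding box.

```python
from dataclasses import dataclass


@dataclass
class PolygonAnnotation:
    label: str                           # class chosen by the annotator, e.g. "car"
    vertices: list[tuple[float, float]]  # (x, y) pixel coordinates in drawing order

    def __post_init__(self):
        # A closed polygon needs at least three vertices.
        if len(self.vertices) < 3:
            raise ValueError("a polygon annotation needs at least three vertices")

    def area(self):
        """Enclosed area via the shoelace formula (clockwise or counter-clockwise order)."""
        total = 0.0
        n = len(self.vertices)
        for i in range(n):
            x1, y1 = self.vertices[i]
            x2, y2 = self.vertices[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2

    def bounding_box(self):
        """Axis-aligned box (x_min, y_min, x_max, y_max) enclosing the polygon."""
        xs = [x for x, _ in self.vertices]
        ys = [y for _, y in self.vertices]
        return min(xs), min(ys), max(xs), max(ys)


car = PolygonAnnotation("car", [(10, 10), (50, 10), (50, 30), (10, 30)])
print(car.label, car.area(), car.bounding_box())
```

The shoelace formula assumes a simple (non-self-intersecting) polygon, so a tool might also check for crossing edges before accepting an annotation.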

Use in Real-Life Machine Learning Projects:

Polygon annotation is widely used in real-world machine learning projects. Some examples include:

- Object Detection for Autonomous Vehicles: Annotating objects of interest (cars, pedestrians, traffic signs) with polygons enables accurate object detection and localisation in autonomous driving systems.

- Satellite Image Analysis: Polygon annotation is utilised to label specific features on satellite images, such as buildings, roads, water bodies, or vegetation. It aids in applications like land cover mapping, urban planning, and disaster response.

- Biomedical Image Analysis: In medical imaging, polygon annotation is employed to segment and label anatomical structures, tumours, or lesions. It assists in diagnostic analysis, surgical planning, and disease monitoring.

3. 3D Bounding Box Annotation

3D bounding box annotation is a technique used in computer vision and machine learning to annotate objects in a 3D space. It involves defining the position, size, and orientation of a bounding box that tightly encloses an object in a three-dimensional coordinate system. This annotation technique is widely used in various applications, including autonomous driving, robotics, augmented reality, and object detection.

The process of 3D bounding box annotation typically involves the following steps:

- Data collection: This step involves capturing or obtaining 3D sensor data, such as point clouds from LiDAR (Light Detection and Ranging) sensors or depth maps from stereo cameras. These data provide information about the objects and their surroundings.

- Object segmentation: The next step is to segment or identify individual objects in the acquired 3D data. Various techniques, such as clustering or semantic segmentation algorithms, can be used to separate different objects.

- Bounding box definition: Once the objects are segmented, a bounding box is defined around each object. The bounding box is defined by its centre coordinates, dimensions (width, height, and depth), and orientation parameters (e.g., yaw, pitch, and roll angles).

- Annotation refinement: In some cases, additional refinement steps may be performed to improve the accuracy and precision of the bounding box annotations. This could involve adjusting the box dimensions or orientation to better align with the object's shape.
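The centre, dimensions, and orientation together fully determine the box. The sketch below assumes a common simplification for objects on the ground: rotation about the vertical axis only (yaw), with pitch and roll taken as zero, and `length` measured along the heading direction. It converts these parameters into the eight corner points, which is handy for drawing the box or checking it against the point cloud.

```python
import math


def box_corners(cx, cy, cz, length, width, height, yaw):
    """Eight corners of a 3D box rotated by `yaw` radians about the vertical z-axis.

    Assumes pitch = roll = 0 and that `length` lies along the heading direction.
    """
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the offset in the ground (x-y) plane, then translate to the centre.
                x = cx + dx * cos_y - dy * sin_y
                y = cy + dx * sin_y + dy * cos_y
                corners.append((x, y, cz + dz))
    return corners


# Hypothetical car-sized box, 4 m long, rotated 90 degrees.
corners = box_corners(10.0, 5.0, 1.0, length=4.0, width=2.0, height=1.5, yaw=math.pi / 2)
for corner in corners:
    print(tuple(round(v, 3) for v in corner))
```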

Several approaches can be used to produce precise 3D bounding box annotations:

- Manual annotation: Expert annotators manually draw bounding boxes around objects in 3D data using specialised annotation tools. This approach allows for precise annotations but can be time-consuming and labour-intensive.

- Semi-automatic annotation: This approach combines manual annotation with automated algorithms to assist in the annotation process. For example, an algorithm might propose initial bounding box estimates that can be refined or adjusted by human annotators.

- Machine learning-based approaches: Machine learning techniques can be employed to automate the annotation process. Training models on annotated data can help predict bounding boxes for new objects. This can significantly speed up the annotation process but requires a large amount of labelled training data.
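As a sketch of the semi-automatic approach, assume a clustering step has already grouped the LiDAR points that belong to one object. A simple proposal is the axis-aligned box enclosing those points plus a small margin, which the annotator then refines (for example, by correcting its yaw). The `propose_box` function, its `margin` default, and the returned dictionary layout are illustrative assumptions, not part of any particular tool.

```python
def propose_box(points, margin=0.1):
    """Axis-aligned proposal around a cluster of (x, y, z) points, padded by `margin`."""
    if not points:
        raise ValueError("cluster is empty")
    xs, ys, zs = zip(*points)
    lo = (min(xs) - margin, min(ys) - margin, min(zs) - margin)
    hi = (max(xs) + margin, max(ys) + margin, max(zs) + margin)
    return {
        "centre": tuple((a + b) / 2 for a, b in zip(lo, hi)),
        "size": tuple(b - a for a, b in zip(lo, hi)),
        "yaw": 0.0,             # left for the annotator to adjust
        "status": "proposed",   # becomes "accepted" after human review
    }


# Hypothetical cluster of three points belonging to one object.
cluster = [(1.0, 2.0, 0.0), (3.0, 2.5, 1.2), (2.0, 3.0, 0.6)]
print(propose_box(cluster, margin=0.0))
```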

Applications of 3D bounding box annotation include:

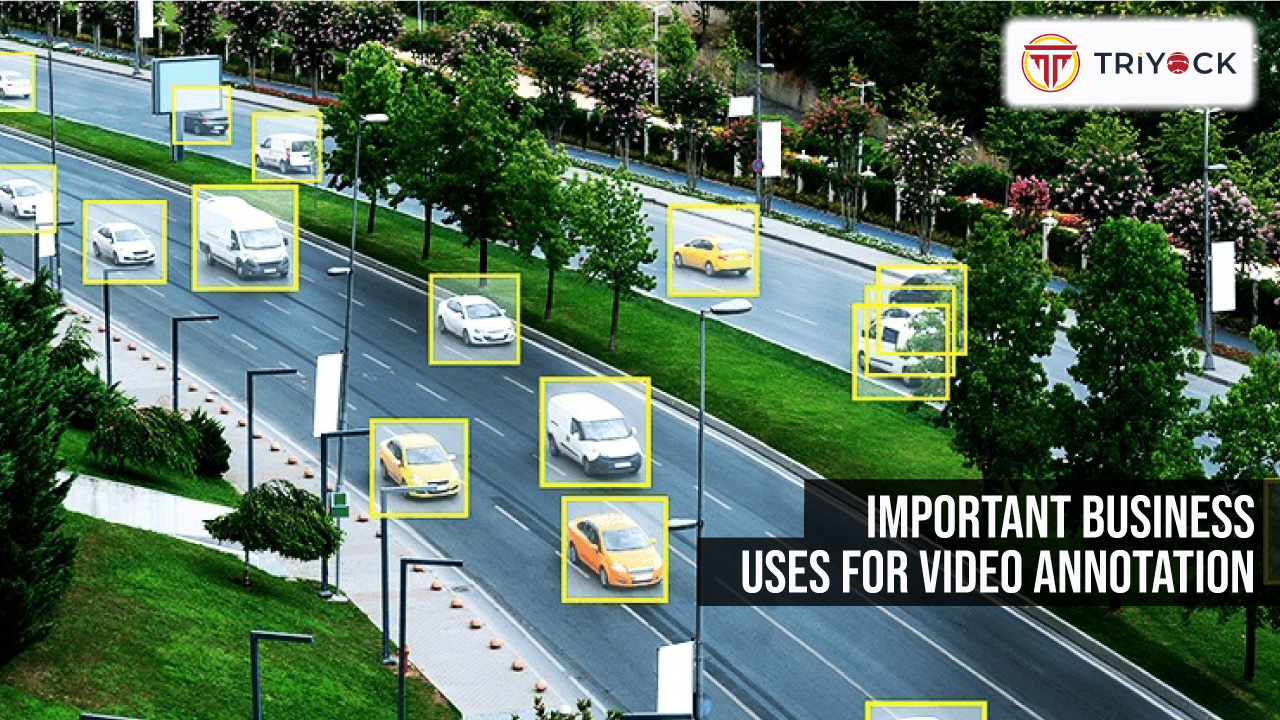

- Autonomous driving: 3D bounding box annotation is crucial for developing perception systems in self-driving cars. It helps in object detection, tracking, and collision avoidance by providing accurate information about the position, size, and orientation of surrounding vehicles, pedestrians, and other obstacles.

- Robotics: Robots equipped with depth sensors can use 3D bounding box annotations to recognise and manipulate objects in their environment. This enables robotic systems to perform tasks such as pick-and-place operations, object classification, and scene understanding.

- Augmented reality (AR): AR applications overlay virtual objects onto the real world. 3D bounding box annotation allows for the precise alignment of virtual objects with the physical environment, enhancing the realism and accuracy of AR experiences.

- Object detection and tracking: 3D bounding box annotation is widely used in computer vision for object detection and tracking in various domains, including surveillance, industrial automation, and sports analysis. It enables algorithms to identify and monitor objects in a three-dimensional space.

Overall, 3D bounding box annotation plays a vital role in enabling machines to perceive and interact with objects in the real world. It facilitates the development of robust and accurate computer vision algorithms across a wide range of applications.

4. Text Annotation

Text annotation methods are techniques used to label and annotate textual data for machine learning tasks such as natural language processing (NLP), sentiment analysis, text classification, and information extraction. These methods involve assigning specific labels or tags to different elements of the text, such as entities, sentiment, parts of speech, or semantic roles.

Here are some commonly used text annotation methods:

- Named Entity Recognition (NER): NER involves identifying and categorising named entities (e.g., person names, locations, organisations) in text. Annotators mark the boundaries of entities and assign corresponding labels.

- Sentiment Analysis: Sentiment annotation aims to determine the sentiment or opinion expressed in a given text. Annotators assign sentiment labels, such as positive, negative, or neutral, to indicate the overall sentiment of the text or specific segments within it.

- Part-of-Speech (POS) Tagging: POS tagging involves labelling each word in a sentence with its part of speech, such as noun, verb, adjective, or adverb. This annotation helps in analysing the grammatical structure of the text.

- Coreference Resolution: Coreference annotation links expressions in a text that refer to the same entity, for example a pronoun and the noun phrase it refers to. Annotators mark each mention and associate it with its antecedent.

- Semantic Role Labelling (SRL): SRL aims to identify the semantic roles that phrases play with respect to a predicate (usually a verb), such as agent, patient, or instrument. Annotators mark the predicate and label its arguments according to their function in the event it describes.
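Span-level annotations such as NER labels are often converted into one tag per token before a sequence model is trained. A minimal sketch, assuming pre-tokenised text, spans given as (start, end_exclusive, label) token indices, and the widely used BIO scheme (B = beginning of an entity, I = inside, O = outside):

```python
def spans_to_bio(tokens, spans):
    """Convert (start, end_exclusive, label) token spans into one BIO tag per token."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        if not 0 <= start < end <= len(tokens):
            raise ValueError(f"span {(start, end)} is out of range")
        if any(tag != "O" for tag in tags[start:end]):
            raise ValueError(f"span {(start, end)} overlaps another entity")
        tags[start] = f"B-{label}"      # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"      # remaining tokens of the entity
    return tags


tokens = ["Marie", "Curie", "worked", "in", "Paris"]
spans = [(0, 2, "PER"), (4, 5, "LOC")]
print(list(zip(tokens, spans_to_bio(tokens, spans))))
```

Rejecting overlapping spans here is a design choice: plain BIO cannot represent nested entities, so overlaps usually indicate an annotation error or a need for a different scheme.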

Accurate text annotation can be challenging due to various factors, including ambiguity, subjectivity, and context dependence. Here are some strategies to address these challenges:

- Clear Annotation Guidelines: Well-defined annotation guidelines that provide clear instructions and examples help annotators maintain consistency and reduce ambiguity.

- Iterative Annotation and Quality Assurance: Regular feedback loops and quality assurance processes ensure continuous improvement in annotation accuracy. Regular discussions, training sessions, and inter-annotator agreement assessments help identify and address potential issues.

- Annotator Training: Proper training for annotators is essential to familiarise them with the annotation task, guidelines, and domain-specific knowledge. Training sessions can include examples, practice exercises, and discussions to enhance annotation accuracy.

- Domain Expert Involvement: In complex domains, involving domain experts in the annotation process helps ensure accurate labelling of domain-specific entities or concepts.

Proper text annotation has a significant impact on machine learning projects. Here are a few application areas where accurate text annotation is particularly important:

- Question Answering Systems: Text annotation plays a crucial role in training question-answering systems. Accurate annotation of question types, named entities, and answer spans helps improve the system's ability to provide relevant and correct answers.

- Chatbot Development: Chatbots rely on text annotation to understand user queries and generate appropriate responses. Proper annotation of user intents, entities, and sentiment aids in building robust and context-aware chatbot models.

- Information Extraction: Text annotation is vital for information extraction tasks, such as extracting structured data from unstructured text. Annotated entities, relationships, and events enable machine learning algorithms to identify and extract relevant information accurately.

- Sentiment Analysis for Product Reviews: Accurate sentiment annotation in product reviews helps train sentiment analysis models that can assess customer opinions and sentiment towards specific products or services. This aids businesses in understanding customer feedback and making data-driven decisions.

Quality in Data Annotation

Quality control measures in data annotation are essential to ensure the accuracy and consistency of the labelled data. Here are some common quality control techniques:

- Annotation Guidelines: Clear and detailed annotation guidelines provide annotators with instructions, examples, and definitions of annotation categories. These guidelines help maintain consistency among annotators and reduce ambiguity.

- Training and Calibration: Training sessions and calibration exercises familiarise annotators with the annotation task, guidelines, and expectations. This process involves providing feedback, discussing challenging cases, and resolving doubts to align annotators' understanding and ensure consistency.

- Inter-Annotator Agreement (IAA): IAA is the measure of agreement among multiple annotators working on the same data. Calculating IAA helps identify inconsistencies and discrepancies in annotations. Disagreements can be resolved through discussions, clarifications, or additional training.

- Regular Quality Assurance: Regularly reviewing annotated data and providing feedback to annotators helps address any issues and improve annotation accuracy over time. Quality assurance can involve sampling and evaluating annotated data, comparing annotations, and providing constructive feedback to annotators.

- Consensus or Adjudication: When annotators disagree, a consensus or adjudication process can be used, in which a third-party expert or senior annotator reviews the disagreement and establishes the final annotation.
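When two annotators label the same items, inter-annotator agreement is often reported as Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (observed - expected) / (1 - expected). A minimal sketch, assuming categorical labels and exactly two annotators, which also lists the disagreeing items so they can go to consensus or adjudication:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: (observed agreement - chance agreement) / (1 - chance agreement)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both annotators pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both used one identical label throughout; kappa is undefined, treat as perfect
    return (observed - expected) / (1 - expected)


def disagreements(labels_a, labels_b):
    """Indices of items the two annotators labelled differently."""
    return [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]


annotator_a = ["pos", "pos", "neg", "neg", "pos", "neu"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neu"]
print(cohens_kappa(annotator_a, annotator_b))
print(disagreements(annotator_a, annotator_b))
```

In this example the annotators agree on five of six items, but kappa is about 0.74 once chance agreement is removed, and item 1 is the one to adjudicate.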

Ambiguous or challenging labelling scenarios call for specific handling. Here are some approaches:

- Annotation Discussions: Establishing channels for annotators to discuss challenging cases can help clarify ambiguities and ensure consistent labelling. Discussions can be held through meetings, forums, or online platforms, allowing annotators to share insights and seek clarifications.

- Expert Involvement: In complex domains or cases with high ambiguity, involving domain experts or experienced annotators can improve the accuracy of annotations. Their expertise can help resolve challenging labelling scenarios and provide guidance to annotators.

- Contextual Information: Providing annotators with additional context, such as background information or related data, can aid in handling ambiguous scenarios. Contextual information helps annotators make informed decisions and produce more accurate annotations.

Collaborative annotation approaches leverage the collective intelligence of multiple annotators to enhance annotation accuracy. Here are a few collaborative annotation approaches:

- Crowd Annotation: Crowdsourcing platforms allow for distributed annotation tasks where multiple annotators independently label data. Majority voting, or another method of aggregating the annotations from multiple annotators, can then be used to derive the final labels.

- Pairwise Annotation: Pairwise annotation involves assigning two annotators to independently label the same data, and then comparing and resolving discrepancies between their annotations. This approach helps identify and resolve annotation disagreements, leading to more accurate labels.

- Annotation Review and Feedback: Implementing a feedback loop between annotators and reviewers allows for iterative improvements in the annotation process. Reviewers can provide feedback on annotations, address questions, and guide annotators toward better accuracy and consistency.

- Annotator Collaboration Tools: Collaborative annotation platforms or tools facilitate communication and collaboration among annotators. These tools often include features like discussion forums, annotation review interfaces, and real-time collaboration capabilities to enhance annotation accuracy.
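Majority voting can be combined with a review loop: items with a clear majority receive a final label, while ties and split votes are routed to a reviewer or adjudicator. A minimal sketch, assuming a strict-majority rule (more than half of the votes) and hypothetical item IDs and labels:

```python
from collections import Counter


def aggregate(votes_by_item):
    """Strict-majority vote per item; anything without a majority goes to review."""
    final, needs_review = {}, []
    for item, votes in votes_by_item.items():
        if not votes:
            needs_review.append(item)
            continue
        label, count = Counter(votes).most_common(1)[0]
        if count * 2 > len(votes):  # more than half of the annotators agree
            final[item] = label
        else:
            needs_review.append(item)  # tie or split vote: send to a reviewer
    return final, needs_review


votes = {
    "img-1": ["cat", "cat", "dog"],
    "img-2": ["cat", "dog"],
    "img-3": ["dog", "dog", "dog"],
    "img-4": ["cat", "dog", "bird"],
}
final, needs_review = aggregate(votes)
print(final)
print(needs_review)
```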

Conclusion

Precise data annotation is vital for ML projects because it provides the labelled ground truth used to train and evaluate models. The techniques covered here include semantic segmentation, polygon annotation, 3D bounding box annotation, and text annotation methods such as NER, sentiment analysis, and POS tagging. Effective annotation strategies, including clear guidelines, annotator training, quality control, and collaboration, improve label accuracy. Accurate annotation builds high-quality datasets, which lead to reliable ML models and help unlock the potential of AI.

Frequently Asked Questions

1. What is the role of data annotation in machine learning?

The role of data annotation in machine learning is to label and annotate data to provide ground truth or reference information for training and evaluating machine learning models. It helps the models learn patterns, make predictions, and perform specific tasks accurately.

2. How does manual annotation differ from automated annotation?

Manual annotation involves human annotators labelling and annotating data, often using specialised annotation tools. Automated annotation, on the other hand, uses algorithms or pre-trained models to generate annotations without human intervention.

3. Which annotation techniques are suitable for object detection tasks?

Annotation techniques suitable for object detection tasks include bounding box annotation, where a rectangular box is drawn around the object of interest, and polygon annotation, where the object's outline is defined using a series of connected points.

4. How can semantic segmentation improve ML project labelling?

Semantic segmentation improves ML project labelling by assigning a label to each pixel in an image, capturing object boundaries and spatial information in detail. This enables more precise analysis and interpretation of images in computer vision tasks.

5. What challenges are associated with text annotation?

Challenges associated with text annotation include ambiguity of language, subjective interpretations, varying annotator expertise, and handling large volumes of text data efficiently.

6. Is it possible to combine manual and automated annotation methods?

Yes, it is possible to combine manual and automated annotation methods. Automated methods can be used to generate initial annotations, which are then refined or validated by human annotators, ensuring both efficiency and accuracy.
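One common way to combine the two is confidence-based routing: labels the model is confident about are accepted automatically, and the rest are queued for human annotators as pre-filled suggestions. A minimal sketch, assuming the model outputs a confidence score between 0 and 1 for each label and using an arbitrary threshold of 0.9 that would need tuning on validation data:

```python
def route(predictions, threshold=0.9):
    """Split (item_id, label, confidence) predictions into accepted and to-review lists."""
    accepted, for_review = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            accepted.append((item_id, label))    # keep the automatic label
        else:
            for_review.append((item_id, label))  # pre-filled suggestion for a human
    return accepted, for_review


# Hypothetical model output for three documents.
predictions = [
    ("doc-1", "invoice", 0.97),
    ("doc-2", "receipt", 0.62),
    ("doc-3", "invoice", 0.91),
]
accepted, for_review = route(predictions)
print("auto-accepted:", accepted)
print("send to annotators:", for_review)
```

Because high confidence does not guarantee a correct label, spot-checking a random sample of the auto-accepted items is a sensible addition.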

7. How can data annotation impact the performance of machine learning models?

Data annotation impacts the performance of machine learning models by providing labelled data for training, testing, and validating the models. High-quality annotations lead to more accurate models and better performance on the desired tasks.

8. What are the considerations for quality control in data annotation?

Considerations for quality control in data annotation include providing clear guidelines, assessing inter-annotator agreement, giving regular feedback and training, and implementing quality assurance processes to ensure consistent and accurate annotations.

9. Are there any industry standards for data annotation?

There is no single, universally adopted industry standard for data annotation, as requirements and practices vary across domains, tasks, and applications. However, established best practices and guidelines exist to ensure quality and consistency in annotation processes.

10. What are the common challenges faced during 3D bounding box annotation?

Common challenges in 3D bounding box annotation include accurately defining the position, size, and orientation of objects in a 3D space, handling occlusions and complex object shapes, and ensuring consistency among annotators in multi-view scenarios.
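Consistency between annotators is often checked with intersection over union (IoU) between the boxes they draw for the same object. The sketch below is a simplification: it assumes axis-aligned boxes given as (xmin, ymin, zmin, xmax, ymax, zmax) and ignores yaw, so it does not handle rotated boxes, which need an extra polygon-intersection step in the ground plane.

```python
def volume(box):
    """Volume of an axis-aligned box (xmin, ymin, zmin, xmax, ymax, zmax)."""
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d_axis_aligned(box_a, box_b):
    """Intersection over union of two axis-aligned 3D boxes (yaw ignored)."""
    overlap = 1.0
    for axis in range(3):
        lo = max(box_a[axis], box_b[axis])
        hi = min(box_a[axis + 3], box_b[axis + 3])
        if hi <= lo:
            return 0.0  # no overlap along this axis
        overlap *= hi - lo
    return overlap / (volume(box_a) + volume(box_b) - overlap)


# Hypothetical boxes drawn by two annotators for the same car.
annotator_a = (0.0, 0.0, 0.0, 4.0, 2.0, 2.0)
annotator_b = (1.0, 0.0, 0.0, 5.0, 2.0, 2.0)
print(iou_3d_axis_aligned(annotator_a, annotator_b))
```

Here the boxes share 12 of the 20 cubic units they jointly cover, giving an IoU of 0.6; a project might flag pairs below an agreed threshold for review.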

info@triyock.com
